Microsoft just tried to sell us a feature straight out of a dystopian sci-fi script, and the reaction was exactly what you’d expect: a massive, collective “nope.” The feature is called Recall, part of their new Copilot+ PCs, and it’s designed to give your computer a photographic memory. It works by taking screenshots of your active screen every few seconds, creating a searchable, visual timeline of literally everything you’ve ever done. Sounds innovative, right? Or does it sound like the single greatest privacy and security disaster waiting to happen? If you guessed the latter, congratulations, you have better instincts than a multi-trillion-dollar corporation.

What in the Black Mirror is Microsoft Recall?

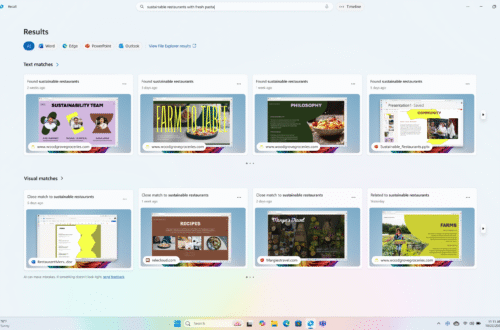

Let’s break down the pitch versus the reality. The pitch from Microsoft is simple: with Recall, you’ll never lose anything on your PC again. Can’t remember that one website you visited last week with the perfect recipe? Just type “chocolate chip cookie recipe” into Recall, and it’ll scrub through its visual history to find the exact moment you were looking at it. Forget the name of a document you were working on? Describe its contents, and Recall will pull up the snapshot. It’s a compelling concept, powered by AI running locally on your device’s Neural Processing Unit (NPU), a specialized chip designed for AI tasks.

The tech giant insisted it was all private & secure because the data stays on your device. The snapshots, which can quickly eat up a significant chunk of your drive (Recall requires at least 50 GB of free storage, with 25 GB allocated to snapshots by default), are supposed to be for your eyes only. The problem? “Local” doesn’t mean “safe.” Not even close. Especially when the implementation is so full of holes it looks like Swiss cheese.

The Security Dumpster Fire Everyone Saw Coming

It took security researchers approximately five minutes to tear Recall apart. Almost immediately after it was announced, experts warned that creating a centralized database of a user’s entire digital life was a catastrophically bad idea. They were right. Security researcher Kevin Beaumont publicly demonstrated just how insecure the initial version was. Here’s the horrifying breakdown:

- Plaintext Database: The data Recall collected was stored in a simple SQLite database. For anyone not in the know, that’s a lightweight, file-based database. The kicker? It was stored in plaintext. Unencrypted. Anyone with user-level access to the PC, including common info-stealing malware, could simply copy this file and have a record of everything you’ve ever seen or typed on your computer.

- Everything is Logged: We mean *everything*. Deleted WhatsApp messages? Still in the screenshots. Financial info you typed into a web form? It’s there. Passwords viewed in a password manager or accidentally revealed in a text field? Yep, captured. Fleeting notifications with sensitive two-factor authentication codes? You bet.
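To make the “plaintext” point concrete, here is a minimal Python sketch using only the standard library. It does not touch Recall’s real database: the file name `timeline.db`, the `snapshots` table, its columns, and the sample rows are all hypothetical stand-ins. It simply shows that an unencrypted SQLite file can be opened and queried by any process running as the same user, with no key and no prompt, and that the captured text even sits in the file’s raw bytes.

```python
import os
import sqlite3
import tempfile

# Hypothetical table for illustration only; this is NOT Recall's actual schema.
SCHEMA = "CREATE TABLE snapshots (captured_at TEXT, window_title TEXT, ocr_text TEXT)"

# Made-up sample rows standing in for text extracted from screenshots.
SAMPLE_ROWS = [
    ("2024-05-20T09:14:03", "Bank - Transfer", "Account 12345678, amount 500.00"),
    ("2024-05-20T09:15:41", "Messages", "Your verification code is 481516"),
]


def write_unencrypted_log(path: str) -> None:
    """Store captured screen text in an ordinary, unencrypted SQLite file."""
    conn = sqlite3.connect(path)
    try:
        with conn:  # commits the inserts when the block exits cleanly
            conn.execute(SCHEMA)
            conn.executemany("INSERT INTO snapshots VALUES (?, ?, ?)", SAMPLE_ROWS)
    finally:
        conn.close()


def read_everything(path: str) -> list[tuple[str, str, str]]:
    """Read the whole log back: no password, key, or authentication step is involved."""
    conn = sqlite3.connect(path)
    try:
        return conn.execute(
            "SELECT captured_at, window_title, ocr_text FROM snapshots ORDER BY captured_at"
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "timeline.db")  # hypothetical file name
        write_unencrypted_log(db_path)
        for row in read_everything(db_path):
            print(row)
        # Even without a SQLite library, the secret is visible in the file's raw bytes.
        with open(db_path, "rb") as f:
            print(b"481516" in f.read())
```

Nothing in the reader is clever, and that’s the point: when the file itself is the only barrier, copying it is the whole attack.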

Beaumont built a simple tool that could extract and display a user’s entire Recall history in seconds. It was a spectacular own-goal for Microsoft. They essentially built a perfect surveillance tool and handed the keys to any hacker who could successfully phish a user. The UK’s data watchdog, the Information Commissioner’s Office (ICO), quickly announced it was making enquiries with Microsoft, stating they expect tech firms to be “upfront with users about how their data is used” and to ensure security is baked in from the start. Whoops.

Microsoft’s Frantic Backpedaling

Faced with a tidal wave of criticism from security experts, privacy advocates, & the general public, Microsoft did what any company does after a massive public blunder: they panicked and promised to fix it. Just before the Copilot+ PCs were set to launch, they announced some major changes. Originally, Recall was going to be enabled by default. You would have had to know it existed and actively go into your settings to turn it off. That’s a dark pattern if we’ve ever seen one.

After the backlash, Microsoft reversed course. In a blog post that screamed “damage control,” they announced three key changes:

- It’s Now Opt-In: Thank goodness. Recall will be turned off by default, and you’ll have to explicitly choose to enable it during the setup process. This is the single most important change they made.

- Windows Hello Required: To enable Recall, you’ll need to enroll in Windows Hello (facial recognition, fingerprint, or PIN). This is also required to view your timeline, adding a layer of authentication before someone can casually browse your digital past.

- Better Encryption: The database will now be encrypted and decrypted “just-in-time” using Windows Hello Enhanced Sign-in Security (ESS). This means the data is only decrypted when you authenticate.

Are these changes enough? They’re certainly a massive improvement over the initial train wreck. Making it opt-in is non-negotiable, and the added encryption is what should have been there from day one. But it doesn’t change the fundamental nature of Recall. You’re still creating a comprehensive log of your activity that, if compromised, is devastating. Malware that gains administrative privileges could still potentially bypass these protections. The feature is still a massive, juicy target for attackers.

Your Actionable Guide to Dealing with Recall

So, you’re thinking about getting one of these new Copilot+ PCs. What should you do? It’s pretty straightforward.

Just Say No

When you’re setting up your new PC, you will be asked if you want to turn on Recall. The answer is no. Unless you have a very specific, critical need for this kind of functionality and fully understand the risks, the potential for disaster far outweighs the convenience. The ability to find that one funny meme you saw three weeks ago isn’t worth exposing your entire digital life.

Understand the Hardware

Right now, Recall is exclusive to Copilot+ PCs, which are defined in part by an NPU capable of at least 40 trillion operations per second (TOPS). If your current PC doesn’t have this specific hardware, you won’t be getting Recall. This is a good thing. But be aware that this is likely the direction the industry is heading, so stay vigilant.

Scrutinize All “AI” Features

Recall is a wake-up call. Companies are racing to cram “AI” into every product, often without thinking through the consequences. Use this as a lesson. When your phone, OS, or favorite app introduces a new AI feature, ask yourself these questions:

- What data is this feature collecting?

- Where is it being stored & processed (locally or in the cloud)?

- How is it secured?

- Do I have control over it? Can I easily turn it off & delete the data?

Don’t just blindly accept new features. The hype is a marketing tool; your privacy is not.

The Bigger Picture: AI’s Trust Problem

The Recall saga isn’t just about one bad feature. It’s a symptom of a much larger disease in the tech industry: a profound disconnect from user reality & a cavalier attitude toward privacy. Microsoft engineers likely thought this was a brilliant technical achievement. They failed to ask the most important question: “Should we build this?”

This incident has severely damaged trust in Microsoft’s AI ambitions. How can users trust Copilot to handle their data responsibly when the same company thought default-on screen recording was a good idea? It violates a core tenet of data ethics: data minimization, the principle of collecting only the data necessary for a specific purpose. Recall is the polar opposite. It’s data maximization, collecting everything just in case.
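Data minimization is easy to express in code. The sketch below is hypothetical: the captured-event fields and the “reopen a recent document” purpose are invented for illustration and say nothing about how Recall actually stores data. It shows the principle itself: declare up front which fields a purpose needs, keep those, and discard the rest instead of hoarding everything just in case.

```python
from typing import Any

# Hypothetical record a "remember everything" feature might capture.
# Field names and values are illustrative only.
CAPTURED_EVENT: dict[str, Any] = {
    "timestamp": "2024-05-20T09:14:03",
    "app": "Word",
    "document_path": "C:/Users/me/report.docx",
    "screen_text": "Q3 salary review, confidential",
    "screenshot_png": b"\x89PNG\r\n\x1a\n",
}

# Data minimization: the purpose "reopen a recent document" needs only these fields.
FIELDS_FOR_REOPENING_DOCUMENTS = {"timestamp", "app", "document_path"}


def minimize(record: dict[str, Any], needed: set[str]) -> dict[str, Any]:
    """Return a new dict containing only the fields required for the stated purpose."""
    return {key: value for key, value in record.items() if key in needed}


if __name__ == "__main__":
    stored = minimize(CAPTURED_EVENT, FIELDS_FOR_REOPENING_DOCUMENTS)
    print(stored)
```

Here the stored record keeps only `timestamp`, `app`, and `document_path`; the screen text and screenshot are never written anywhere, so there is nothing for an attacker to steal later.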

As AI becomes more integrated into our operating systems, we’re at a crossroads. We can demand technology that respects us, that is secure by design, and that puts us in control. Or we can sleepwalk into a future where our devices are constantly watching, recording, and analyzing us, all under the guise of “convenience.” Microsoft’s Recall showed us what that future looks like, and for a moment, everyone saw it for the privacy nightmare it is. Let’s not forget it.